The way you search the internet is evolving as tech companies harness artificial intelligence to enhance your results.

Google, the world’s most popular search engine, recently introduced generative artificial intelligence in search. Now, when you search on Google, you’ll sometimes see an “AI overview” at the top of the results page that summarizes the answer or information you’re looking for, instead of just a list of related links.

The tech giant’s AI update came under scrutiny recently after screenshots went viral showing unusual or factually incorrect information. For example, when someone searched “how many Muslim US presidents have there been,” the AI overview said one: Barack Obama. That’s false.

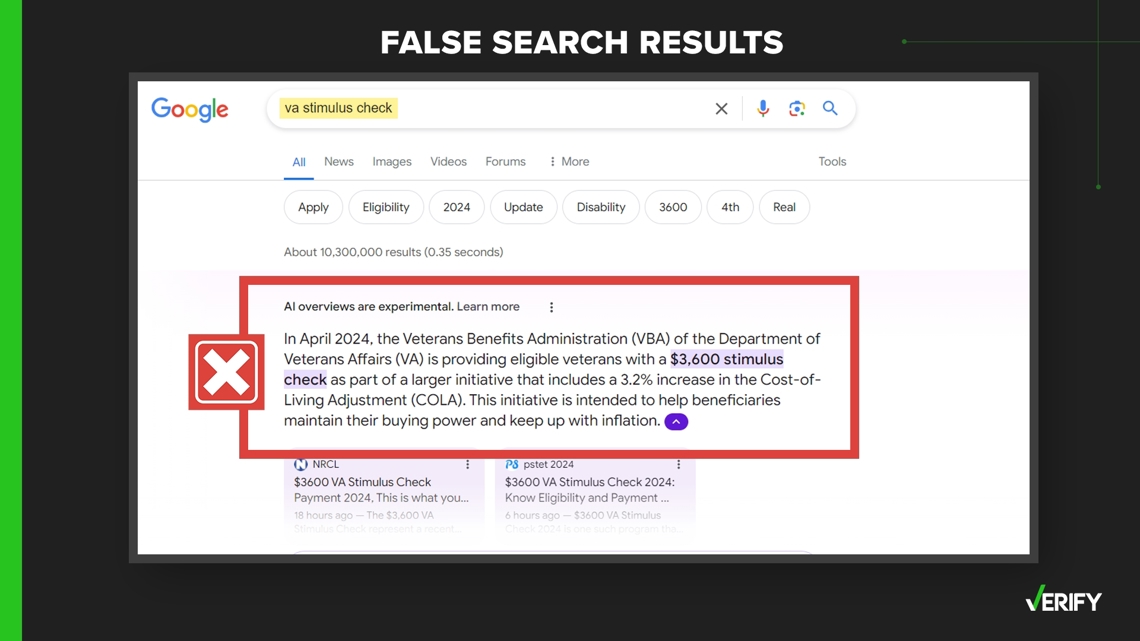

Google’s AI also said children should eat at least one rock a day and suggested adding glue to pizza sauce. We’ve also previously debunked false claims, repeated by Google’s AI, that veterans would receive an extra stimulus payment.

Google confirmed to VERIFY that all of these recent examples were real search results.

“Many of the examples (of incorrect AI overviews) we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce. We conducted extensive testing before launching this new experience, and as with other features we've launched in Search, we appreciate the feedback. We're taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out,” a Google spokesperson said.

These examples show that simply searching for a topic does not guarantee you will be presented with factual, accurate information.

Here are some ways to verify the information in your online queries to avoid unintentionally contributing to the spread of misinformation.

THE SOURCES

- A Google spokesperson

- April 25 episode of “Search Off the Record” podcast

- ResFrac, a geology and hydraulic fracturing company

- McKenzie Sadeghi, AI and foreign influence editor for NewsGuard

- AARP

- Barbara Gray, chief librarian and associate professor at the CUNY Graduate School of Journalism

- King County Library System blog post

WHAT WE FOUND

Google and other popular search engines use software known as web crawlers to scour the internet for pages to add to their index. When someone searches for something, the search engine looks through that index, Google’s library of pages, to provide results.

When Google began using its generative AI tool, known as Gemini, to provide AI overviews, the feature “was designed to show information that is supported by web content, with links that you can click to learn more,” the Google spokesperson told VERIFY.

On the April 25 episode of the “Search Off the Record” podcast, Google developers and analysts conducted a live test of the Gemini AI tool. While discussing how the real-time test would work, one of the participants acknowledged that some of the results they got during the live test might not be correct.

“I think my bigger problem with pretty much all generative AI interfaces is the factuality that you always have to fact-check whatever they are spitting out, and that kind of scares me that now we are just going to read it live, and maybe we are going to say stuff that is not even true. And then we will be like, whoa, whoa, whoa, whoa,” Google analyst Gary Illyes said on the podcast.

Using each of the examples that recently went viral, VERIFY compiled these tips to help you fact-check search results for yourself.

1. Consider the source

Google’s AI overviews summarize information from existing online sources and include links to those sources. That means you can click on those links and evaluate the sources yourself for accuracy or bias.

When considering the source of information, here are five questions you can ask yourself:

- Who’s the author?

- Does the author cite their sources?

- Is this a well-known or legitimate website?

- Is the author or news source potentially biased?

- Is the account or website devoted to satire, or does it contain parody content?

In the case of the eating rocks example, Google’s search results directed users to the website of ResFrac, a geology and hydraulic fracturing company. In 2021, ResFrac published what looked like a standard blog post, but it was actually a reprint of an article originally published by the popular satirical website The Onion.

Google’s spokesperson told VERIFY the page appeared in the results as a “geology website,” but it was actually displaying the reprinted satirical article. ResFrac recently posted an update to the article page after the company went viral for “confusing” Google’s AI model.

“UPDATE, MAY 2024: Welcome to our AI visitors! Thank you to The Onion for the amusing content (linked above). You picked a photo with a great-looking geophysicist. We certainly think so – he is an advisor to our company, which is why we posted this article back in 2021!

“Now, three years later, eagle-eyed observers have noticed that we are listed as a source by Google’s AI Overview when it advises eating ‘at least one small rock per day,’” the update said.

The update continues, “It’s been fun for us in ResFrac to have – very randomly – found ourselves with a tertiary role in this week’s news cycle. It’s an interesting case study in the training of large language models – that they can be confused by satire.”

In the pizza and glue example, the search results included a link to a Reddit discussion thread, Google said. Reddit is a forum where people can comment, respond and converse with others while remaining pseudonymous. A Reddit post on its own is not a reputable source.

When we received questions from VERIFY readers about stimulus checks for veterans, we searched Google for “va stimulus check.” The AI overview summary included false information that the Department of Veterans Affairs (VA) would be sending stimulus checks. The information was summarized from dubious websites not associated with the VA.

This type of false information can spread widely with the help of “content farms.”

McKenzie Sadeghi, AI and foreign influence editor for NewsGuard, told AARP these are “supposed news sites that regurgitate dubious information and often rely on AI-generated articles with little or no human oversight.”

By considering what sources the AI overview is using, you may be able to spot a factually inaccurate answer.

2. Check the context

As with anything you might see on social media, television or in search results, you should always check the context. Follow these tips:

- Do a gut check. Does what you're seeing or hearing make sense? Is something you hear surprising to you? Does it challenge your previous beliefs?

- Look for pieces of information or details that are inconsistent with others that you have read or seen.

- Look for fact-checks of the content from sites like VERIFY.

For example, would a reputable source like a doctor tell you that your child should really eat at least one rock a day?

While many varieties of glue are in fact non-toxic, would you actually consider mixing it with your pizza sauce?

3. Analyze the content

Articles that make you angry or emotional may have been “manufactured or doctored to exploit your biases,” Barbara Gray, chief librarian and associate professor at the CUNY Graduate School of Journalism, says.

You should look for primary and reputable sources cited in the reporting, such as original documents and recordings or a person with direct knowledge of a situation.

In the Barack Obama example, Google’s search results pulled information from two sources: a Wikipedia page about the conspiracy theory that Obama is a Muslim, and a book about religious affiliations in politics.

Reputable news outlets have widely reported that Obama is not Muslim, and that claims he is Muslim circulated as part of a wide network of conspiracy theories about the former president.

The book is titled “Faith in the New Millennium: The Future of Religion and American Politics,” and its chapter 5 is titled “Barack Hussein Obama: America’s First Muslim President?”

The chapter discussed how Obama was falsely called a Muslim when he first emerged as a political candidate; it never confirmed that he was “America’s First Muslim President.”

“Google’s systems misunderstood the context of the name of the chapter,” Google told VERIFY.

4. Read beyond the headline

Many people don’t read beyond the headline on articles they see online, including the title or headline of a link that appears in search results.

People sharing fake or misleading information use that to their advantage by creating clickbait headlines that distort the truth, Emily Bell, founding director of the Tow Center for Digital Journalism at Columbia University, told AARP.

A blog post on spotting fake news from the King County Library System in Washington state advises asking yourself whether the content of the website or article matches the headline.

“If there are clues whether an article is fake or not, there are going to be more of them in the body of the article, not the title. Read beyond the headline and decide for yourself,” the blog post says.

In the case of inaccurate search results, it can be helpful to scroll past the initial AI overview and associated source headlines to see what other search results show. If other results are showing conflicting information, that is a sign you need to research the topic further and be skeptical of the AI overview answer.